C# Windows Form Mouse Events Unity

Posted By admin On 29/12/17

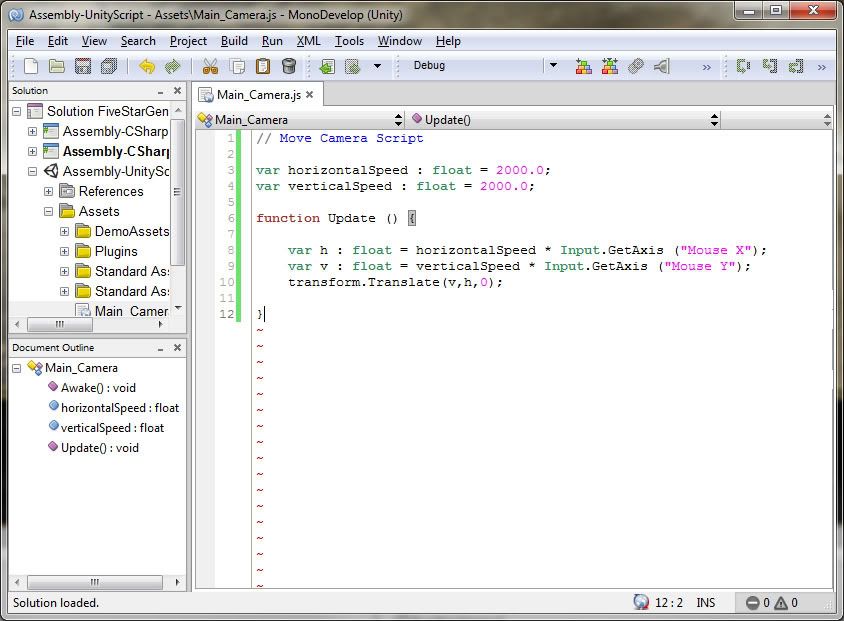

Good evening everyone. I am a new developer, currently working on a Windows application. I have to integrate a window within the application that shows a 3D model and is controlled by the Windows application. I am a huge fan of the Unity engine and have worked with it as a hobby. I am wondering whether it is possible to have an instance of a compiled Unity executable running within my Windows application, so that when I run the program, the Unity project is loaded within the software. Here is a diagram to show what I mean. I don't need to know how to integrate it, just whether such integration is possible.

Dear all, we are new to Unity, and for a customer project we need to control a Unity object by touch from a WPF C# touch application. The object in Unity is a small test 3D cube, given to us by our customer, that we should be able to rotate from a WPF container. How can we control that cube from our application? In WPF we have access to the Unity Web container as an ActiveX control; do you think that if we run Unity in that web browser we will be able to manipulate it directly with touch? Thanks for your prompt help, which will help us move forward, as we have been stuck here for days now. I have a trial version of Unity for testing this and can create a small object with your help, in case something needs to be set up in the Unity object for manipulation. Help is really appreciated. Regards, Serge.

Use the SetParent WinAPI call (in that case it is a good idea to launch the Unity executable with the -popupwindow parameter). Note that I've done this with the whole parent window area, so I'm not completely sure whether it's possible to occupy only a portion of the window (by passing, say, the HWND of a groupbox/panel to SetParent), as depicted in the topic. We've done this and, although somewhat cumbersome, the Unity exe then runs completely embedded in the parent surface/window (although, now that I think about it, we should probably rather have launched the exe and implemented e.g. fullscreen/resolution logic in the exe itself). The Unity exe then has focus, so any changes have to be communicated, if required, to the WinForms/WPF process, and the only way of doing so is via sockets; everything else is safely buried deep inside this custom Mono.

On controlling the mouse position: either the mouse_event WinAPI function (declared in C# as `static extern void mouse_event(int dwFlags ...`) or the System.Windows.Forms.Cursor class can be used to move the mouse.

Erm, what exactly 'needs' some modifications? The Unity executable window has an HWND like any other Windows application, so it can be reparented/assigned to another window (we run the Unity executable windowed; fullscreen might pose a problem). If it weren't possible, I wouldn't see our .NET/WinForms app running, calling the external Unity program and assigning it into a prepared WinForm, which certainly is not the case. By the way, I'm talking about 'classic' WinAPI, not Metro and such, which I don't know, if that's what you meant; or maybe I'm describing a slightly different use case.

The scene in our setup is driven by external network data, but keyboard input definitely works (we use it to adjust parameters at runtime) and, IIRC, mouse too. But you may be right that this setup might not support all possible controller configurations. By the way, for all things concerned, the window in which Unity runs is still the same; only its parent is changed.
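For readers who want to try the reparenting approach described in the answer, here is a minimal WinForms sketch. It is not the poster's actual code: the executable name `MyUnityBuild.exe`, the class name `UnityHostForm`, and the polling loop are assumptions made for illustration. It uses the form's whole client area, since that is the only setup the thread confirms as working.

```csharp
using System;
using System.Diagnostics;
using System.Runtime.InteropServices;
using System.Threading;
using System.Windows.Forms;

// Sketch: launch a Unity standalone build and reparent its window into this form.
public class UnityHostForm : Form
{
    [DllImport("user32.dll", SetLastError = true)]
    private static extern IntPtr SetParent(IntPtr hWndChild, IntPtr hWndNewParent);

    [DllImport("user32.dll", SetLastError = true)]
    private static extern bool MoveWindow(IntPtr hWnd, int x, int y,
                                          int width, int height, bool repaint);

    private Process unity;                    // the external Unity process
    private IntPtr unityHwnd = IntPtr.Zero;   // its top-level window handle

    public UnityHostForm()
    {
        Text = "Unity host (sketch)";
        Shown += (s, e) => StartUnity();
        Resize += (s, e) => FitUnityWindow();
        FormClosed += (s, e) =>
        {
            // Stop the child process when the host closes.
            if (unity != null && !unity.HasExited) unity.Kill();
        };
    }

    private void StartUnity()
    {
        // Hypothetical path to the compiled Unity player; adjust to your build.
        unity = Process.Start("MyUnityBuild.exe", "-popupwindow");
        unity.WaitForInputIdle();

        // The main window may appear a little later, so poll briefly.
        // (This blocks the UI thread; acceptable for a sketch only.)
        for (int i = 0; i < 100 && unityHwnd == IntPtr.Zero; i++)
        {
            Thread.Sleep(100);
            unity.Refresh();
            unityHwnd = unity.MainWindowHandle;
        }
        if (unityHwnd == IntPtr.Zero) return; // window never showed up

        SetParent(unityHwnd, Handle);         // same window, new parent
        FitUnityWindow();
    }

    private void FitUnityWindow()
    {
        if (unityHwnd == IntPtr.Zero) return;
        MoveWindow(unityHwnd, 0, 0, ClientSize.Width, ClientSize.Height, true);
    }

    [STAThread]
    private static void Main()
    {
        Application.EnableVisualStyles();
        Application.Run(new UnityHostForm());
    }
}
```

To experiment with embedding in only part of the window, one could pass the `Handle` of a `Panel` to `SetParent` instead of the form's; the thread leaves open whether that works. As noted above, the embedded Unity window takes focus, so anything the host needs to know has to be sent back separately, for example over a socket.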